Join us

The Tech&People group is always on the lookout for enthusiastic candidate members with multidisciplinary backgrounds. In our work, we embed ourselves in the contexts we are designing for (formative studies), we design and build web, mobile, wearable, robotic, gaming, and tangible interactive systems, and we evaluate them with target users. If you join our group, it is likely that you will work closely with end-users, collaborate with a multidisciplinary team (engineers, designers, data scientists, psychologists, clinicians), build novel and impactful interactive systems, eventually publish and present your work internationally, and see your project being used in real-life contexts.

Note about COVID-19:

The pandemic is taking a toll on everyone’s lives. At Tech&People, we regularly engage with end-users, some of them more fragile. This has obviously impacted the way we do our research. Currently, our evaluations and formative sessions with end-users are performed remotely, and we have adapted all our workflows to be done remotely as well, to the extent possible. The group has regular online events (weekly project meetings, reading clubs) and an ongoing virtual laboratory on Discord. Regardless of the confinement status, working remotely will always be an option for those who prefer it (with continuous involvement in our virtual communication channels).

Below, you can find the master's thesis proposals that the group is offering for the year 2021/2022. We also present a set of stories about what members of the group did in their master's or are doing now, with the goal of providing some background to the proposals and an overall view of the opportunities you can find at Tech&People. Lastly, you can find a set of Frequently Asked Questions. If you want to know more, get in touch by e-mail, follow us on Twitter, or check our publications.

MSc Proposals 2021/2022

Motivation

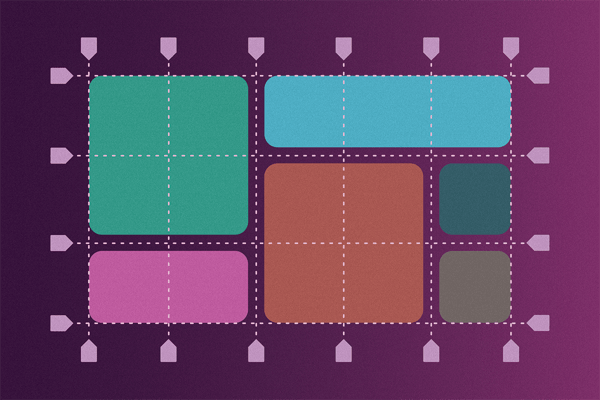

Current software development techniques are guided by designers or developers who are responsible for designing, developing, and running tests on the interface. Usually, the consequence is a one-size-fits-all solution aimed at an average user. Many of these solutions and design choices do not take individual preferences and needs into account. Personalization of interactive systems aims to give users more control over interface features in order to better meet their needs. This approach has been explored in previous work but has struggled to emerge as an accepted practice. In today’s UIs, we can witness support for some of these adaptations (e.g., zooming in and out of a webpage, or changing its contrast). However, when users access a webpage or application, it is almost never adjusted to their abilities and preferences.

What you will do

In this thesis, the student will be challenged to explore the benefits and limitations of end-user personalization of web interfaces. Users should be able to, for instance, change interface attributes such as colors, text, and size, or reorder structural elements. The student will explore different ways to create a user model (personalization, calibration, …). The student will also explore how to transform the webpage styles into constraints that can be merged with the aforementioned user model. In the end, users will be able to use the approach by setting up a proxy or by installing a browser extension and see the adaptations happen on any webpage. This thesis will include the design, development, and evaluation of the approach with end-users. The work will be done in collaboration with researchers from Northumbria University, UK.
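As a rough illustration of what merging webpage styles with a user model could look like, the sketch below treats the user model as a set of minimum values and raises any page style that falls below them. This is only one possible reading of the idea: the property names (`font-size-px`, `line-height`) and the function `adapt_styles` are hypothetical and are not part of any existing tool from the group.

```python
from typing import Dict


def adapt_styles(page_styles: Dict[str, float],
                 user_minimums: Dict[str, float]) -> Dict[str, float]:
    """Return a copy of the page styles where every property covered by the
    (hypothetical) user model is at least as large as the user's minimum.
    Properties the user model does not mention are left untouched."""
    adapted = dict(page_styles)
    for prop, minimum in user_minimums.items():
        # If the page does not define the property, fall back to the minimum.
        adapted[prop] = max(adapted.get(prop, minimum), minimum)
    return adapted


# Illustrative values only: a page with small text and a user who needs larger text.
page = {"font-size-px": 12.0, "line-height": 1.6}
user = {"font-size-px": 18.0, "line-height": 1.5}
adapted = adapt_styles(page, user)
# adapted == {"font-size-px": 18.0, "line-height": 1.6}
```

A real user model would likely need richer constraints (ranges, color contrast, element ordering), which is part of what the thesis is meant to explore.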

Team

Motivation

Mainstream touchscreen technologies such as smartphones and tablets support non-visual access through the use of built-in screen readers (e.g., VoiceOver on iOS and TalkBack on Android). Screen readers enable visually impaired users to interact with the device either through gestures (e.g., swipes or taps) to navigate between elements or through an Explore by Touch approach, where users drag their finger on the screen and the UI elements are read aloud. While these tools provide access to touchscreen devices, efficiency is limited because feedback is restricted to a sequential audio channel that contrasts with the visual information presented on screen. In this thesis, we aim to take advantage of people’s ability to process simultaneous audio channels (the Cocktail Party Effect, where one can focus attention on a single voice but still detect interesting content in the background) to explore solutions that convey multiple audio streams in parallel. We aim to maximize efficiency in touchscreen interaction for blind people, but without affecting their performance.

What you will do

In this thesis, we aim to design, develop, and evaluate auditory feedback solutions that leverage concurrent speech in the context of touchscreen interaction and exploration for visually impaired users. You will be challenged to develop system-wide services for Android devices that enable concurrent speech depending on the user context. As an example, we expect that on some occasions notifications can be read aloud to users in parallel, without interrupting the user’s current task (and feedback). You will conduct user studies early on to engage participants in co-design sessions, ensuring user engagement and representation.

Team

Motivation

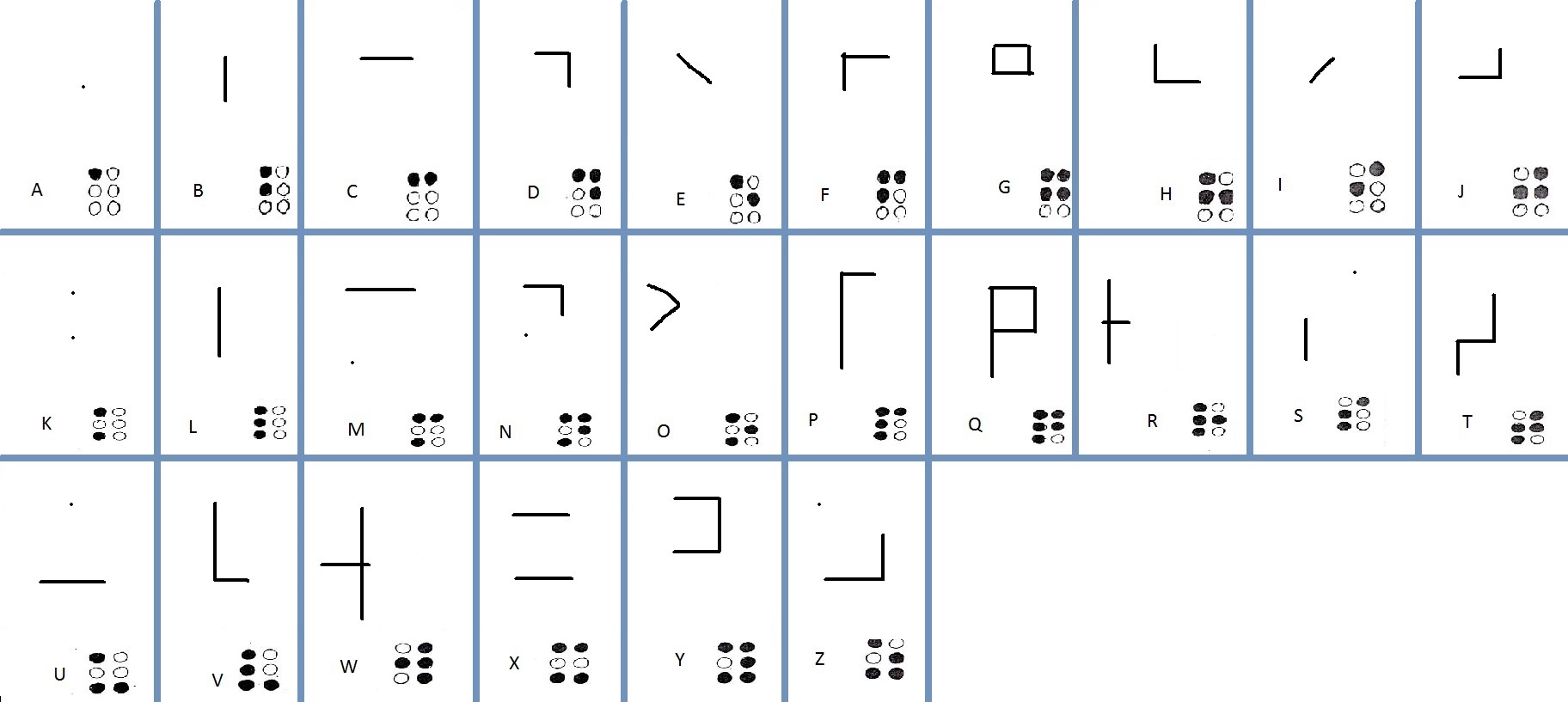

Since the advent of touchscreen mobile devices, numerous input methods have been proposed for blind people. Braille chording approaches have been particularly effective in improving typing speed (see https://techandpeople.github.io/projects/brailleio.html). However, these approaches normally target a single type of device and are not suited to one-handed use.

What you will do

In this work, you will build a new typing method that enables blind people to write Braille characters on a smartwatch by drawing the shape of the Braille cell rather than chording it. First, you will collect a dataset with a broad set of participants; second, you will explore and assess different shape recognizers that are able to identify individual characters. You will integrate a Braille spellchecker to improve word-level accuracy. Ultimately, this approach can be tested on other devices. The project will be developed in collaboration with an institution for blind people and researchers from IST and Northumbria University.

Team

Tiago Guerreiro

André Rodrigues

João Guerreiro

Hugo Nicolau

Kyle Montague

Motivation

Several aspects of our lives have been democratized (e.g., social networks democratized access and delivery of information, 3D printers enabled anyone to create and share models for new physical interfaces - Thingiverse). Conversely, one crucial aspect remains out of bounds to individual agency: the User Interface. Today, we are still stuck with the interfaces developers/designers thought to be the ones that best served our/their interests.

What you will do

In this thesis, you will build a platform that is able to make changes to webpage styles. These changes will be stored on top of a GitHub project, enabling these new designs to be used by others, shared, and forked. This approach will also enable changes to the base UI to be accounted for and propagated to subsequent repositories, enabling the long-term usage of the designs. We have already explored a browser extension that is able to edit webpages and store design changes. This project will grow that proof of concept into a full-fledged community platform. The work will be done in collaboration with Northumbria University.

Team

Motivation

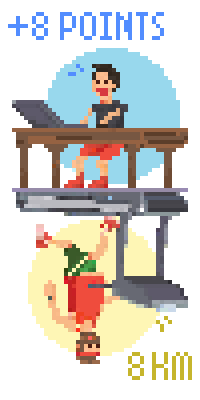

Gaming has the potential to prompt feelings of togetherness through a shared activity, based on challenging goals and immersive interaction. However, finding the time, or a common interest among family members, is often hard or even impossible, limiting the opportunities for a sense of shared play.

What you will do

In this thesis, you will be challenged to create new opportunities for family members to contribute to each other's play (e.g., parents and children). You will explore how to design asymmetric games that harvest everyday tasks from one family member as a contribution to the game of another. For example, can a parent’s work productivity influence a child’s game, or a child’s study hours influence a sibling’s play? What are the implications of these dynamics? This work will explore proof-of-concept scenarios and evaluate them with users. This work will be done in collaboration with researchers from KU Leuven, Belgium.

Team

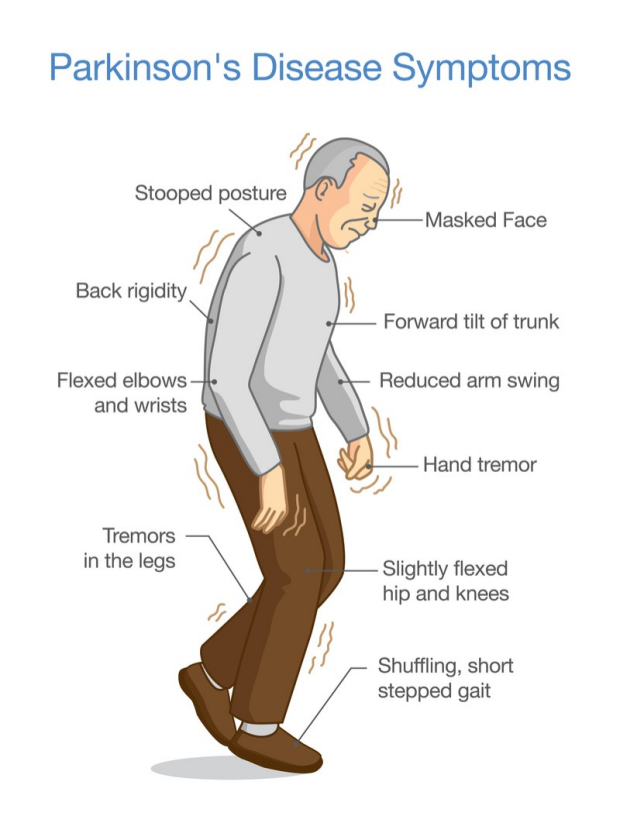

Motivation

Gait has been extensively explored as a marker for movement disorders. There are several projects collecting datasets from participants through wrist accelerometry, seeking to classify gait instances and derive measures that can help monitor disease fluctuations. However, people with Parkinson’s disease have very individualized gait patterns (and fluctuations), and a generalized approach is sub-optimal.

What you will do

In this thesis, you will be challenged to explore a personalized approach to gait identification, focused on real-world usage. First, you will design and develop an application to support the collection and annotation of gait data. Second, you will explore different approaches to identify gait instances and derive measures (e.g., step length) from them. The project will be done in collaboration with Campus Neurológico Senior and, if successful, will be integrated into the Datapark platform (developed by us and used at this center for 3+ years).

Team

Motivation

Robots have been a popular research tool in education because they are attractive and relevant for learning complex concepts. The use of robots increases engagement in social and collaborative actions, which may also effectively impact children’s social development and learning. Although robots are now widely available and affordable, minorities are still set apart, and we have still not reached a state where visually impaired children can autonomously interact with a robot. Also, the potential of maker technologies (DIY) to support new forms of interaction and understanding of technology may motivate and empower children in the creation of their own technology (e.g., a robot). Although HCI research on accessible making technologies for people with visual impairments is growing, these technologies are not yet accessible to children.

What you will do

In this project, you will focus on co-designing, with educators and children with mixed visual abilities, inclusive environments for situated making and introductory programming. The goal will be to create accessible making tools where children build modules to create a robot that interacts with other elements and with a map, where they can use programming concepts while having their own project and tangible outcomes. You will design a DIY module to afford the creation of a robot and other interactive elements, deploy it in an inclusive school, and assess its impact (optionally, depending on time and confinement restrictions, deployments can be individual and shipped to the children’s homes, with their parents’ cooperation).

Team

Motivation

Orientation and Mobility (O&M) can be defined as a set of concepts, skills, and techniques that enable people with vision impairments to travel through an environment safely and independently. Orientation refers to people’s ability to position themselves in the environment, reflected in their awareness of where they are and where they want to go, while mobility refers to people’s ability to move independently from one place to another in a safe, effective, and efficient manner. These two interlinked concepts play a very important role in the lives of people with vision impairments in general and are extremely important to children, as the ability to travel independently provides access to a wide range of activities that enable people to participate in society.

What you will do

In this thesis, we aim to investigate current methodologies for O&M training and to design, develop and evaluate novel technological solutions to improve its effectiveness and engagement. We see opportunities for technology to further support O&M training activities both during and after classes with O&M specialists/teachers. Potential areas of research (you may suggest others) that can be explored in this thesis are (one or a combination of):

- Virtual Reality to improve immersion by replicating auditory and/or tactile cues of the real-world.
- Tabletop Robots to improve map understanding, route-based learning, among others.
- Games to improve engagement in learning O&M concepts, techniques, and skills.

You will conduct user studies early on to engage O&M specialists and blind children in co-design sessions ensuring user engagement and representation. This work will conclude with a user study evaluating the technological solution developed.

Team

Motivation

People with vision impairments are able to navigate independently by using their Orientation and Mobility skills and their travel aids (white cane or guide dog). However, navigating independently in unfamiliar and/or complex locations is still a major challenge, and therefore blind people are often assisted by sighted people in such scenarios. While navigation technologies such as those based on GPS (e.g., Google Maps) can help, their accuracy is still too low (e.g., around 5 meters) to fully support blind users when navigating in unknown locations. Moreover, indoor locations do not support GPS and generally do not have a navigation system installed.

What you will do

Our goal is to use data from the crowd (other people who walked the same areas before) to learn more about the environment and be able to provide additional instructions to blind users. A possible approach is to use smartphone sensors to estimate possible paths after an individual enters a building. While the GPS may inform us that users are entering/inside a building, large amounts of data (crowd-based) from smartphone sensors may give us information about possible paths and obstacles to instruct blind users. For instance, one may learn that after the entrance there is a path going left and after approximately 10 meters there are stairs to go up one floor; and another path that goes forward (and so on). While the data from a single user may be erroneous, we plan to use data from many users to make such an approach more robust.
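To make the robustness argument concrete, the sketch below combines noisy distance estimates from several (hypothetical) users with a median, so that one erroneous report does not distort the result. It is a minimal illustration under assumed inputs, not the method the thesis will necessarily adopt; the function name `robust_distance`, the `min_reports` threshold, and the sample values are all made up for the example.

```python
from statistics import median
from typing import List, Optional


def robust_distance(estimates_m: List[float],
                    min_reports: int = 3) -> Optional[float]:
    """Combine per-user distance estimates (in meters) for the same path
    segment. Returns None when too few users have reported, otherwise the
    median, which is far less sensitive to a single bad estimate than the mean."""
    if len(estimates_m) < min_reports:
        return None
    return median(estimates_m)


# Illustrative data: distance from a building entrance to the stairs,
# as estimated from five users' smartphone sensors. One report is clearly off.
reports = [9.5, 10.2, 10.0, 31.0, 9.8]
print(robust_distance(reports))      # prints 10.0
print(robust_distance(reports[:2]))  # prints None (not enough reports)
```

With the same data, the arithmetic mean would be 14.1 meters, pulled upward by the single outlier.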

Team

Motivation

The COVID-19 pandemic had a huge impact on how technology is used in the care of patients with Parkinson’s disease (PD). As some normality returns, there are several benefits to harvest. For example, telemedicine enables people in rural areas to have access to specialists more regularly. It also enables triage of those who really need to go to a physical appointment. Videoconference tools, like Zoom, were the tools of the trade for remote appointments in the last year. Although they enable video conversation, they could be tweaked to better support the doctor in assessing the patient.

What you will do

In this thesis, you will be challenged to enrich a telemedicine videocall with interaction and monitoring capabilities on the patient and doctor side for a more effective appointment. First, you will characterize the type of assessment performed by neurologists during a routine appointment. Then, you will build plugins that enable the patient to perform tasks (e.g., following targets with their eyes, selecting or pointing to targets) and the doctor to have more information on those and other tasks (e.g., measures of head movement, pupil size, hand stability) through audio and video analysis.

Team

Motivation

Typing on a touchscreen has the potential to be a clinically relevant biomarker. It involves motor, perceptual, and cognitive functions. Also, it is something we do constantly throughout the day, making it a good candidate for granular assessment of the user state. Recently, we built a keyboard toolkit that is able to derive metrics related to the textual and touch behaviours of participants, without compromising users’ privacy (see https://techandpeople.github.io/wildkey).

What you will do

In this thesis, you will be challenged to explore the potential of typing data as a digital phenotype, applied in several case studies. First, you will focus on identifying which measures are promising for specific diseases (e.g., Dementia, Parkinson’s, ALS). Then, together with clinicians, you will explore the design of usable reports (i.e., rich visualizations) that enable the quick assessment of changes. Ultimately, you will explore the classification of disease stage from typing behaviours. The project will be developed in collaboration with clinicians from national and international institutions.

Team

Tiago Guerreiro

André Rodrigues

André Santos

Kyle Montague

Hugo Nicolau

Motivation

Webpages are frequently not adapted to users’ characteristics, and specifications for accessibility of Web pages do not necessarily guarantee a usable or satisfying Web experience, particularly for people with disabilities. In today’s UIs, we can witness support for some adaptations (e.g., zooming in and out of a webpage or changing its contrast). However, browsers and websites provide very restrictive options that are still manually controlled by users. When users open a webpage or application, it is almost never adjusted to their abilities and preferences.

What you will do

In this thesis, you will be challenged to investigate how to adapt interfaces based on a user’s (and other users’) past interactions. The goal of this thesis is twofold: 1) automatically create user models based on users’ interactions with webpages; and 2) use that knowledge to adapt the interfaces. First, the student will create a browser extension that is able to harvest interactions and model how people interact with each webpage and its widgets. The student will explore anomaly detection approaches to understand when a user is being ill-served by the interface and suggest when a different interface element or interaction mechanism can be used for a specific user to be more efficient with the task at hand.

Team

This proposal is already assigned to a specific student.

Motivation

In modern society, entertainment as a whole has come to be recognized as a fundamental part of our lives and well-being. Gaming has a long list of potential benefits, including coping with anxiety, social bonding, and serving as a creative outlet. While there is a vast array of options available for playing together, players are very limited in the experiences they are able to share when there is a significant difference in skill, ability, or gaming tastes, among others.

What you will do

In our group, we have been exploring how to leverage asymmetric game roles to create opportunities for shared play. In this topic, you will have the opportunity to delve deeper into the design of Asymmetric Games and tackle one of three challenges: 1) Competitive Game for Mixed Visual Ability; 2) Balancing Differences in Skill; 3) Ensuring Engagement & a Sense of Shared Play.

Team

This proposal is already assigned to a specific student.

Motivation

Virtual reality is an emerging technology that is slowly becoming available to the masses at affordable prices. VR is currently used in a variety of contexts: gaming, education, shopping, social spaces, employee training, to name a few. As with any emerging technology, it is fundamental that we ensure its accessibility among people with different abilities. One of the major challenges blind people face in virtual environments is to navigate/move in the virtual space. While prior work has focused on mimicking real-world techniques, such as a virtual white cane (due to user familiarity), in virtual reality there are many locomotion techniques that vary greatly from application to application (e.g., free teleportation, walk in place, analog stick, directional dashes, waypoint navigation). In addition, blind users in virtual environments will not have the same restrictions as in the real world, nor the restrictions sighted people have, due to a lack of VR sickness (similar to motion sickness due to visual stimuli). We argue that this combination provides an opportunity to explore novel/fantastical mobility methods that are not possible otherwise.

What you will do

In this thesis, you will be challenged to design, develop, and evaluate novel navigation techniques in VR for blind people. You will conduct user studies early on to engage participants in co-design sessions, ensuring user engagement and representation. This work will conclude with a user study evaluating the developed set of navigation techniques.

Team

This proposal is already assigned to a specific student.

Motivation

The COVID-19 pandemic has changed the way scientific conferences and meetings in general are held, moving from in-person to virtual interactions. While we hope the pandemic will be over soon, such virtual (or at least hybrid) events are likely to be more prevalent in society in the future. Overall, event organizers and attendees have been able to adapt to this shift, and have strived to make use of immersive environments that try to replicate real-world interactions. However, such environments are not accessible and end up excluding people with disabilities (e.g., people who are blind, deaf, or hard of hearing). In this thesis, we aim to build an inclusive virtual conference/meeting environment, built on top of the Jitsi platform, that provides an immersive and accessible experience for people with different abilities.

What you will do

In this thesis you will be challenged to design, develop, and evaluate a novel platform for virtual meetings, built on top of an existing virtual conferencing tool (Jitsi). You will conduct user studies early on to understand the requirements of such a tool, ensuring user engagement and representation. This work will conclude with a user study evaluating the developed platform.

Team

João Guerreiro

Tiago Guerreiro

André Rodrigues

David Gonçalves

STORIES

Diogo Marques studied the phenomenon of social insider attacks on personal mobile devices in his PhD, resorting to anonymity-preserving large-scale online studies. His work won awards at the most reputable usable security conference and attracted intense media attention, most notably a Daily Show sketch (from minute 3:05). During his PhD, he spent 4 months at IBM Research NY, doing an internship. He is now a user researcher at Google Munich.

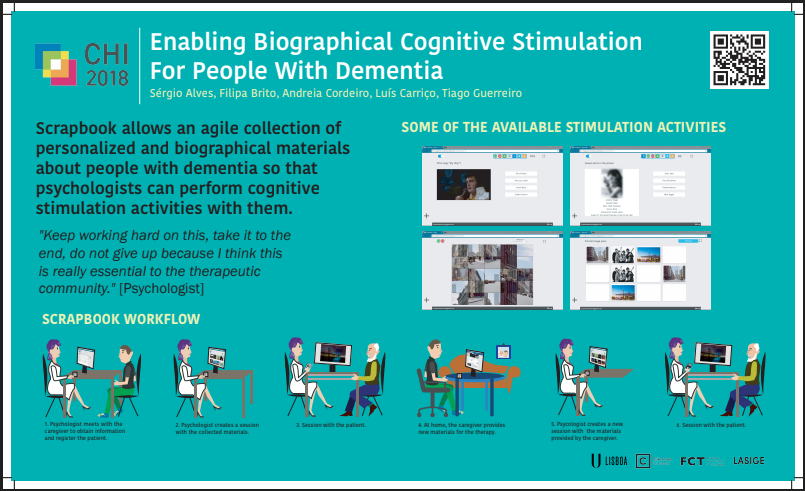

Sérgio Alves completed his master's thesis in 2017, when he developed Scrapbook, a web platform to enable agile biographic reminiscence therapy, which he presented at the WebForAll conference in San Francisco and which was used for more than a year in national clinical institutions and care homes. Before starting his PhD, he was hired as an engineer in the group to maintain and improve Scrapbook, and was then hired by the Openlab (UK) to maintain the collaboration with Tech&People. He is now researching citizen-led experimentation of user interfaces (advised by Tiago Guerreiro and co-advised by Kyle Montague, Openlab, UK).

André Rodrigues completed his master's thesis in 2014, working on system-wide assistive technologies. In this work, besides a novel tablet two-handed text-entry method for blind people, he was able to provide a mobile phone access solution to Miguel, a tetraplegic blind young man. He presented his MSc work at BCS HCI in Southport, UK and at CHI (the best international conference in Human-Computer Interaction), in South Korea. After that, he pursued his PhD (receiving LASIGE’s and DI’s Best PhD Student Award twice in a row), focusing on human-powered solutions for smartphone accessibility. Among his many contributions, we highlight TinyBlackBox, an Android mobile logger for user studies that enabled a longitudinal study with blind people and has been integrated into the popular AWARE framework. During his PhD, he spent 3 months at Newcastle University, where we collaborated in the development of an IVR health system that is now being explored in a variety of contexts. He is now a Postdoc scholar in the group, focusing on accessibility, gaming, and virtual reality.

Diogo Branco completed his master's thesis in 2018, where he focused on extracting metrics from wrist-worn accelerometers and designing usable free-living reports for neurologists and patients with Parkinson’s Disease. The platform has been in use since then, for over 18 months (and counting). He presented the outcomes of his MSc at CHI 2019 in Glasgow, and started his PhD in 2019. His work enabled ongoing service contracts with pharmaceutical companies and the usage of wearable sensors in clinical trials.

Hugo Simão is an industrial designer doing his master's thesis on robots to support people with dementia. In this process, he designed and developed MATY, a multisensorial robot that is able to project images, emit fragrances and sounds, and move around autonomously. He published his preliminary work at CHI 2019, in Glasgow, and other parallel projects on robots for older adults (e.g., Carrier-pigeon robot at HRI 2020). Hugo is starting his PhD in the group in the next semester, and was accepted for a 6-month internship at Carnegie Mellon University (postponed due to Coronavirus).

David Gonçalves is a current master's student (started in September 2019) in the group, working on asymmetric roles in mixed-ability gaming. So far, he has performed formative studies with blind gamers (and gaming partners), trying to understand their current gaming practices, and has developed his first mixed-ability game using Unity. He submitted his first full paper to an international conference (under review), and joined Tiago in a visit to Facebook Research London, where they were invited to present their work at Facebook's first Research Seminar Series.

Ana Pires is a Postdoc scholar at Tech&People. She is a psychologist working on Human-Computer Interaction, with a particular focus on inclusive education. In her work, she explores how tangible interfaces improve how children learn, for example, mathematical or computational thinking concepts. In the group, she has been working with Tiago and master's students to develop solutions for accessible programming, among many other projects.

Frequently Asked Questions

I am considering doing a thesis in the group but I am not sure I have the technical skills required. Am I required to know how to program wearables, develop games, know computer vision, or how to resort to machine learning algorithms?

We are a human-computer interaction research group with a strong technical component. Besides working with end-users, we build robust user-facing interactive systems to be evaluated in realistic settings. However, the group already has a set of skills that enables newcomers to have a swift onboarding experience. When needed, we provide internal workshops, pair master's students with more experienced researchers, and provide constant support. The group also includes designers, engineers, and a psychologist, to provide support to projects that go beyond the expected knowledge of a student.

What are the future prospects for a student doing a master’s with your group?

User research and user interface development are among the most sought-after areas of expertise in today's market. If you search for job offers, you will consistently find user research positions among the best paid. Our graduates, at the end of their master's, have only faced the challenge of selecting which offer they preferred. While some were eager to join the Portuguese market (e.g., Feedzai, Farfetch), we also have former master's students staying with us as engineers with competitive contracts, several joining us for a PhD, and some going abroad (e.g., Google, Newcastle University).

I want a scholarship. Do you offer those?

The group is currently engaged in four European projects, two national projects, and several service contracts. Most of our students are supported by scholarships in those contexts. All students have the opportunity to apply for the LASIGE scholarships; at the end of those scholarships, according to how the work is going, some students are offered a continuation of their financial support. Some projects are supported from the start, if they are developed in the context of a funded project task. All the students in the stories above were fully supported during their studies. In our current team, more than 50% of the students are supported by scholarships.

What’s the deal with conference travels and internships? Do I have to pay for those?

No. Conference publications are fully supported by the group (travel, hotel, registration, per diem) when submission to the conference is agreed with Tiago. Internships are normally arranged with Tiago and the visited institution, and are also fully covered by funding schemes (e.g., Erasmus+) or by the visited institution/company.

I want to work in the X project but I will need Y technology and a PC with special hardware. Do you have those?

If a proposal requires specific tech/materials, these will be provided, including computers/laptops to work with them (if needed). The group already has access to a wide set of resources such as eye trackers, mobile devices, physiological computing kits, IoT kits, commodity robots, Bluetooth beacons, and VR headsets, as well as cloud computing, panel, and transcription services.

How can I know more about the group?

You can check our publications, team and research pages, to have an idea of our published work and mission. We also invite you to check our lab memo to have a feeling of how the group operates. Follow us on Twitter. And, for any other business or more detail, come talk to us.

What do you expect from your students?

The group welcomes motivated, strong-willed students to pursue impactful work. While it is expected that getting a position will be competitive with regard to a student’s background, we are mostly looking for people who are motivated to work and to make a difference. If you just want to finish your master's in the easiest way possible, this is not the group you should be applying to. Conversely, if you are eager to learn a variety of skills, be challenged, be exposed to real-life problems, and seek fair and effective solutions to them, come work with us.